AI or simple business logic: the art of relevance in technical choices

- barbarela48

- Apr 30

Have you ever wondered about the sometimes questionable practices in data science and artificial intelligence? Here is an analysis of the issues behind them.

The Overuse of the Term "AI": A Marketing and Methodological Trap

The term "AI" has become a kind of magic word in the tech world, used to catch decision-makers' attention or to embellish technical solutions, even when those solutions do not rely on actual artificial intelligence techniques. This leads to what is called **AI-washing**: artificially inflating the capabilities of a product or project by attributing to it a complexity or sophistication that does not match reality.

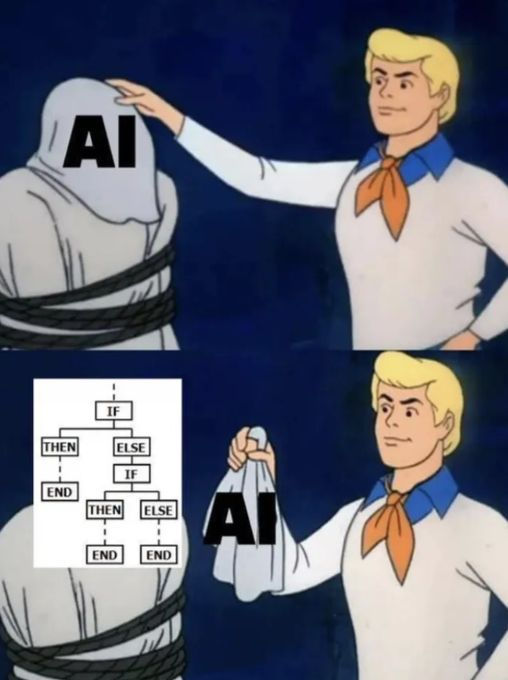

The meme above is a humorous nod, but it highlights a real problem: **many so-called "AI" solutions rely only on basic rules, often coded with simple structures (if/else statements, regex, or rudimentary decision trees)**. Of course, this does not make them any less useful in certain contexts, but it does raise questions about intellectual honesty and clarity in technical choices.

The Dangers of Poorly Calibrated Use of AI

Used without careful thought, AI techniques can lead to three major problems:

- Mismanaged expectations: Decision-makers and end users, influenced by the marketing hype around AI, often expect spectacular results. When those hopes collide with a deterministic solution disguised as AI, the result can be lasting disappointment and lost trust in data teams. For example, a system sold as an AI that "predicts human behavior" but merely applies rigid rules is likely to fail on cases its rules never anticipated and to cause misunderstandings.

- Unnecessary system complexity: A machine learning model or a sophisticated framework is not necessarily the best solution when clear business logic would suffice. Over-engineering makes development tedious and costly, and the result is sometimes less robust than a simple, well-designed solution.

- Data team distraction: The "it's AI, so it must be better" mindset often pushes technical teams to overinvest in ML solutions when a traditional system would have sufficed. Meanwhile, the harder, more interesting problems for which AI would truly be appropriate get sidelined.

In Praise of Well-Considered Simplicity

A good data scientist or data team—and by extension, a good decision-maker—must be able to:

🔹 Recognize situations where a simple solution effectively meets needs.

🔹 Identify cases where an AI or advanced approach is truly necessary and can create added value.

🔹 Know how to deconstruct the mechanisms behind innovation to remain transparent about the strengths and limitations of the proposed solutions.

Real-life example:

Consider a chatbot designed to help telecom customers. If 80% of customer queries are recurring questions such as "How do I check my bills?" or "How do I change my SIM card?", a set of simple keyword-based rules (using regex, for example) may be more than sufficient. Before integrating an advanced NLP model such as GPT or BERT, the team can test this logic deterministically and check whether it already delivers acceptable customer satisfaction.
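Here is a minimal sketch of that keyword approach in Python, assuming just two intents. The intent names (`check_bill`, `change_sim`), the regex patterns, and the canned answers are hypothetical placeholders, not taken from a real telecom system; any query that no rule matches is simply handed to a human advisor.

```python
import re
from typing import Optional

# Hypothetical intents and keyword patterns for the telecom example.
# A real deployment would derive them from actual customer queries.
INTENT_PATTERNS = {
    "check_bill": re.compile(r"\b(bill|bills|invoice|invoices)\b", re.IGNORECASE),
    "change_sim": re.compile(r"\bsim(\s*card)?\b", re.IGNORECASE),
}

# Placeholder answers; the wording is illustrative only.
CANNED_ANSWERS = {
    "check_bill": "You can view your bills in the 'My invoices' section of your account.",
    "change_sim": "To change your SIM card, order a replacement from the 'My line' page.",
}


def match_intent(query: str) -> Optional[str]:
    """Return the first intent whose pattern matches the query, or None."""
    for intent, pattern in INTENT_PATTERNS.items():
        if pattern.search(query):
            return intent
    return None


def answer(query: str) -> str:
    """Answer recurring questions deterministically; escalate everything else."""
    intent = match_intent(query)
    if intent is None:
        # No rule fired: hand off to a human agent.
        return "Let me connect you with an advisor."
    return CANNED_ANSWERS[intent]
```

Because each rule is a plain regular expression, it can be unit-tested and explained line by line, which is exactly the kind of deterministic check worth running before reaching for GPT or BERT.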

However, if a more complex analysis is required—such as detecting ambiguous intentions expressed in unconventional sentences ("My network never works properly, I'm considering canceling")—advanced models may become essential.

Moral and Best Practices: When to Choose and When to Simplify

The key message here is an invitation to **demystify innovation**: whoever designs the solution must exercise discernment and honesty in their choices. Before diving into complex AI frameworks, it is wise to ask the following questions:

What real need of the user or decision-maker are we trying to solve?

Are we primarily looking for speed and robustness, or are we trying to address a non-trivial problem?

Am I over-engineering the solution to meet vague or marketing-driven expectations?

If I choose AI, do I have the resources (time, expertise, data) to guarantee its success? If not, wouldn't deterministic solutions be more appropriate?

Is it possible to start with a simple MVP before adding complexity later if necessary and justified?
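One way to start simple while keeping that door open is a rules-first cascade: deterministic rules answer what they can, and an optional fallback only sees the queries they miss. In this Python sketch the rules are illustrative, and `fallback` is a hypothetical placeholder for whatever model might be added later; it is absent in the MVP. The `coverage` helper measures the share of a query sample the rules already handle, which gives a concrete number to compare with the 80% assumption in the chatbot example.

```python
import re
from typing import Callable, Optional, Sequence

# Rules first: cheap, deterministic, easy to test and explain.
# These keyword rules are illustrative placeholders.
RULES = [
    ("check_bill", re.compile(r"\b(bill|invoice)s?\b", re.IGNORECASE)),
    ("change_sim", re.compile(r"\bsim\b", re.IGNORECASE)),
]


def rule_based_intent(query: str) -> Optional[str]:
    """Return the intent of the first matching rule, or None if no rule applies."""
    for intent, pattern in RULES:
        if pattern.search(query):
            return intent
    return None


def classify(query: str, fallback: Optional[Callable[[str], str]] = None) -> str:
    """Try the rules; call the optional fallback only when no rule matches.

    `fallback` is a hypothetical hook for a more advanced component
    (e.g. a trained intent classifier) added later, if ever justified.
    """
    intent = rule_based_intent(query)
    if intent is not None:
        return intent
    if fallback is not None:
        return fallback(query)
    return "unknown"


def coverage(queries: Sequence[str]) -> float:
    """Fraction of queries handled by the rules alone (0.0 for an empty sample)."""
    if not queries:
        return 0.0
    handled = sum(1 for q in queries if rule_based_intent(q) is not None)
    return handled / len(queries)
```

For instance, on the three example queries from this article (the two recurring questions and "My network never works properly, I'm considering canceling"), `coverage` returns 2/3: the churn-style sentence matches no rule, so it is precisely the kind of query a fallback model would be introduced for. Until that is justified, such queries simply come back as `"unknown"`.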

Combining Pragmatism and Innovation

I think it is important to remind all professionals in the data and AI world that sometimes a good old "if/else" can be your best friend. The main goal remains to create useful, effective solutions tailored to the real needs of the people or organizations you want to serve.

We need to start from the basics: rather than trying to impress with technical jargon or misleading labels, aim for relevance and simplicity from the outset. If artificial intelligence becomes necessary later, that is the time to consider adding complexity.

What do you think? 😊 Have you encountered situations where a simple system would have sufficed, but AI was pushed unnecessarily?
